(Material written by: Kostis Konstantoudakis and Eleana Almaloglou)

Artificial Intelligence (AI), and Deep Learning in particular, has made huge advances in recent years and plays a growing part in many aspects of everyday life. One of the most spectacular capabilities of AI is creating original content, including text (e.g. ChatGPT, Bard), images (e.g. DALL·E, Midjourney), and sound (e.g. MuseNet). However, apart from creating original content, AI tools can also manipulate existing content, for reasons that may be benign or less so.

What are they?

Deepfakes are media (images, videos, or sound clips) that have been manipulated by deep learning algorithms. Their goal is to alter the identity or perceived actions of the people depicted in the manipulated media, showing them doing or saying things they never did. Deepfakes are typically based on two real media items, which are combined to achieve the desired result: e.g. replacing someone’s face with a different one, or applying someone’s voice to words spoken by someone else.

Deepfakes are most commonly visual media (images or video), so this is where we focus in this article.

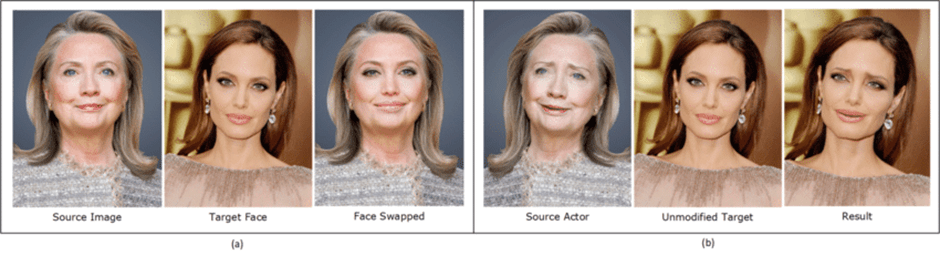

Two common types of deepfake images are:

- Face swapping modifies the identity of the target person, in effect swapping their face for the face of another person (the source), while maintaining the expressions of the target.

- Facial reenactment is in many ways the opposite: it maintains the identity of the target person, but imposes on it the expressions of the source.
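The difference between the two types can be illustrated with a toy sketch. This is only a conceptual model, not how real deepfake software works: actual systems operate on pixels with neural networks, whereas here a face is reduced to two text labels. The names `Face`, `face_swap`, and `facial_reenactment` are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Face:
    """Toy stand-in for a face image (hypothetical, for illustration only)."""
    identity: str    # who the person appears to be
    expression: str  # what the face is doing


def face_swap(target: Face, source: Face) -> Face:
    """Keep the target's expression, but take the identity from the source."""
    return replace(target, identity=source.identity)


def facial_reenactment(target: Face, source: Face) -> Face:
    """Keep the target's identity, but take the expression from the source."""
    return replace(target, expression=source.expression)


target = Face(identity="person A", expression="smiling")
source = Face(identity="person B", expression="frowning")

swapped = face_swap(target, source)
reenacted = facial_reenactment(target, source)

print(swapped)    # person B's identity with person A's expression
print(reenacted)  # person A's identity with person B's expression
```

In both cases the result mixes one property from each input, which is why the two types can be seen as opposites of each other.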

Going from image to video is straightforward, as a video is simply a sequence of images. An example is the deepfake video in [2].

The video above is, of course, meant to be humorous. It is obviously a fake and, even if it’s a very good one, no one would ever mistake it for real. However, AI able to create such realistic fakes may also be used for less innocent purposes.

How can deepfakes be a threat?

Deepfakes, then, can produce realistic images and videos that show individuals performing actions or saying things they never did. As deep learning techniques become more advanced each year, deepfakes are getting harder to detect, and can fool even wary observers.

Criminals and fraudsters can use this for a wide variety of activities, such as:

- Scamming relatives of the victim for money or personal data, e.g. by posing as the victim or showing the victim in situations requiring financial help;

- Scamming business associates by requesting specific business-oriented actions;

- Blackmail, e.g. threatening to circulate photos or videos of the victim in compromising situations;

- Damaging the reputation of the victim, showing them doing or saying things that present them in a negative way;

- Manipulating public opinion, spreading fake news via deepfakes of public figures;

- Spreading propaganda by showing public figures in compromising situations;

- And more.

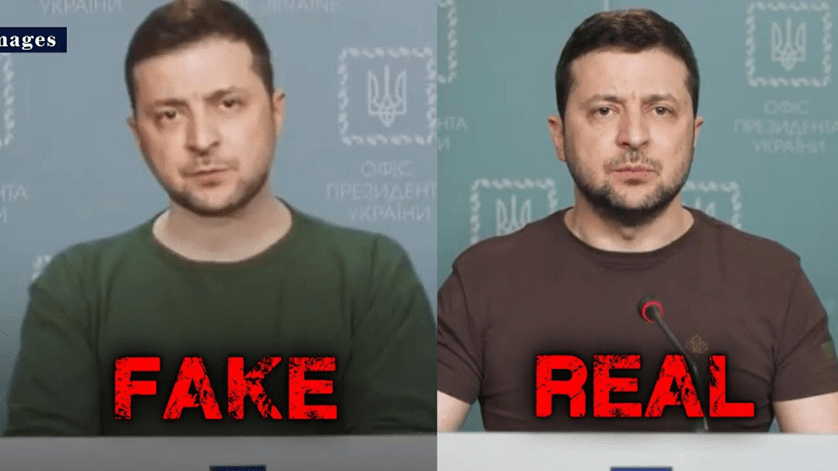

a high-profile recent example: In 2022, a video of President Zelenskyy was circulated in the media where he was seemingly urging Ukrainian soldiers to lay down their weapons. The video was quickly proven a deepfake. The numerous public appearances of Zelenskyy made the unnaturalness of the deepfake very apparent. However, the possible impact on army morale and international politics serves to show the extend of the scale of damage this technology can cause.

Figure 2: Deepfake and real image of president Zelenskyy [3]

References

- [1] Masood, M., Nawaz, M., Malik, K. M., Javed, A., Irtaza, A., & Malik, H. (2023). Deepfakes Generation and Detection: State-of-the-art, open challenges, countermeasures, and way forward. Applied Intelligence, 53(4), 3974-4026.

- [2] crookedpixel: J’adore starring Mr Bean [DeepFake], https://www.youtube.com/watch?v=tDAToEnJEY8

- [3] Inside Edition: Deepfake of Zelenskyy Tells Ukrainian Troops to ‘Surrender’, https://www.youtube.com/watch?v=enr78tJkTLE